Enhancing LLM Capabilities with Custom Functions: A Simple Flight Ticket AI

As Large Language Models (LLMs) continue to evolve, one of the most powerful ways to extend their capabilities is by integrating them with custom functions. In this post, I'll walk through a practical example of how to feed your own functions into both OpenAI and local Ollama models to retrieve flight ticket prices based on simple custom functions - demonstrating the flexibility and potential of function-calling in modern AI systems.

Understanding Function Calling in LLMs

Function calling allows language models to interact with external code, effectively bridging the gap between natural language processing and programmatic actions. By defining functions with specific signatures and descriptions, we can enable LLMs to:

- Recognize when a user request requires specific data

- Call the appropriate function with the correct parameters

- Present the results in a natural, conversational way

This capability is particularly valuable when dealing with real-time data or specialized information that isn't part of the model's training.

Creating Custom Flight Information Functions

For this demonstration, I created two Python functions that simulate flight information retrieval:

Basic Flight Information Function

The first function returns a ticket price based solely on the destination:

ticket_prices = {"london": "$799", "paris": "$899", "singapore": "$3500", "new york": "$4000"}

def get_ticket_price(destination_city):
    print(f"Tool get_ticket_price called for {destination_city}")
    city = destination_city.lower()
    return ticket_prices.get(city, "Unknown")

Basic function to return ticket prices based on destination

Advanced Flight Information Function

The second function is more sophisticated, allowing users to specify both destination and timing preferences:

# Define time slots
TIME_SLOTS = {
    "morning": "06:00-12:00",
    "afternoon": "12:00-18:00",
    "evening": "18:00-24:00",
    "night": "00:00-06:00"
}

# Define base prices and time-based multipliers
ticket_prices = {
    "london": {
        "base_price": 799,
        "time_multipliers": {
            "morning": 1.2,    # 20% more expensive
            "afternoon": 1.0,  # standard price
            "evening": 0.8,    # 20% cheaper
            "night": 0.6       # 40% cheaper
        }
    },
    "paris": {
        "base_price": 899,
        "time_multipliers": {
            "morning": 1.1,
            "afternoon": 1.0,
            "evening": 0.9,
            "night": 0.7
        }
    },
    "singapore": {
        "base_price": 3500,
        "time_multipliers": {
            "morning": 1.1,
            "afternoon": 1.0,
            "evening": 0.9,
            "night": 0.7
        }
    },
    "new york": {
        "base_price": 4000,
        "time_multipliers": {
            "morning": 1.1,
            "afternoon": 1.0,
            "evening": 0.9,
            "night": 0.7
        }
    }
}

Advanced function to return ticket information based on destination and time
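
The listing above shows only the data tables; the lookup function itself does not appear in this post. What follows is a minimal sketch of how such a function could look, not the notebook's actual code. It assumes the name `get_ticket_price_with_time` used in the tool description, a trimmed two-city copy of the price table, an "Unknown" fallback that mirrors the basic function, and rounding to whole dollars.

```python
# Trimmed copy of the price table; both entries come from the data above.
ticket_prices = {
    "london": {
        "base_price": 799,
        "time_multipliers": {"morning": 1.2, "afternoon": 1.0, "evening": 0.8, "night": 0.6},
    },
    "paris": {
        "base_price": 899,
        "time_multipliers": {"morning": 1.1, "afternoon": 1.0, "evening": 0.9, "night": 0.7},
    },
}


def get_ticket_price_with_time(destination_city, time_slot):
    """Return a price string for a city and time slot, or "Unknown"."""
    print(f"Tool get_ticket_price_with_time called for {destination_city}, {time_slot}")
    entry = ticket_prices.get(destination_city.lower())
    if entry is None:
        return "Unknown"
    multiplier = entry["time_multipliers"].get(time_slot.lower())
    if multiplier is None:
        return "Unknown"
    # Rounding to whole dollars is an assumption made for this sketch.
    return f"${round(entry['base_price'] * multiplier)}"
```

Returning the same "Unknown" sentinel for an unrecognized city or time slot keeps the behavior consistent with the basic function, so the model gets one clear signal whenever there is no data.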

Below are the system prompt and the function descriptions that I used to let the LLMs know these functions exist.

system_message = "You are a helpful assistant for an Airline called FlightAI. "
system_message += "Give short, courteous answers, no more than 1 sentence. "
system_message += "Always be accurate. If you don't know the answer, say so."

System Prompt

# description is important for LLM to understand the price function
price_function = {
    "name": "get_ticket_price",
    "description": "Get the price of a return ticket to the destination city. Call this whenever you need to know the ticket price, for example when a customer asks 'How much is a ticket to this city'",
    "parameters": {
        "type": "object",
        "properties": {
            "destination_city": {
                "type": "string",
                "description": "The city that the customer wants to travel to",
            },
        },
        "required": ["destination_city"],
        "additionalProperties": False
    }
}

# updated function for LLM

time_price_function = {
    "name": "get_ticket_price_with_time",
    "description": "Get the price of a return ticket to the destination city based on the time of flight. Call this whenever you need to know the ticket price for a specific time, for example when a customer asks 'How much is a morning flight to this city' or 'What are the prices for different times of day'",
    "parameters": {
        "type": "object",
        "properties": {
            "destination_city": {
                "type": "string",
                "description": "The city that the customer wants to travel to",
            },
            "time_slot": {
                "type": "string",
                "description": "The time slot for the flight (morning, afternoon, evening, or night)",
                "enum": ["morning", "afternoon", "evening", "night"]
            }
        },
        "required": ["destination_city", "time_slot"],
        "additionalProperties": False
    }
}

Lastly, I added both function descriptions to the tools list:

tools = [{"type": "function", "function": price_function}, {"type": "function", "function": time_price_function}]

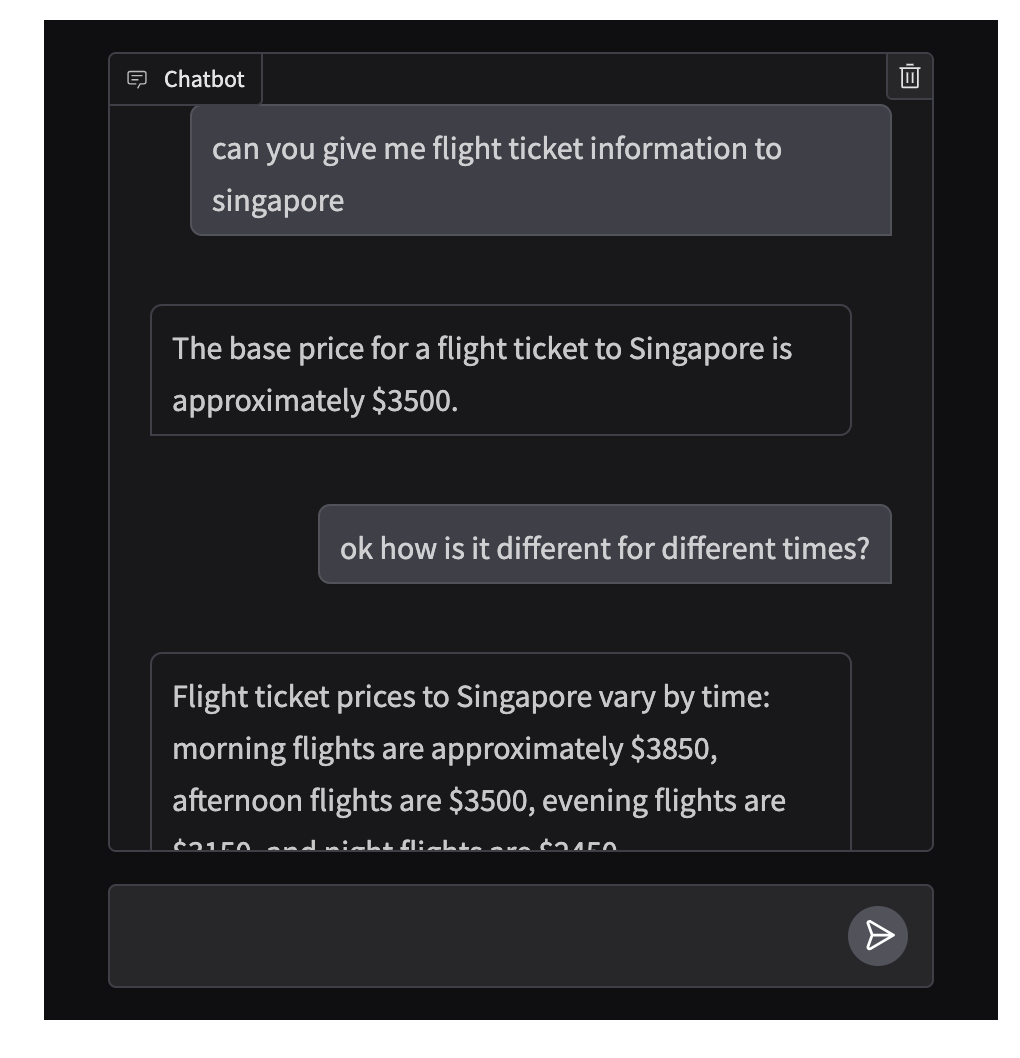

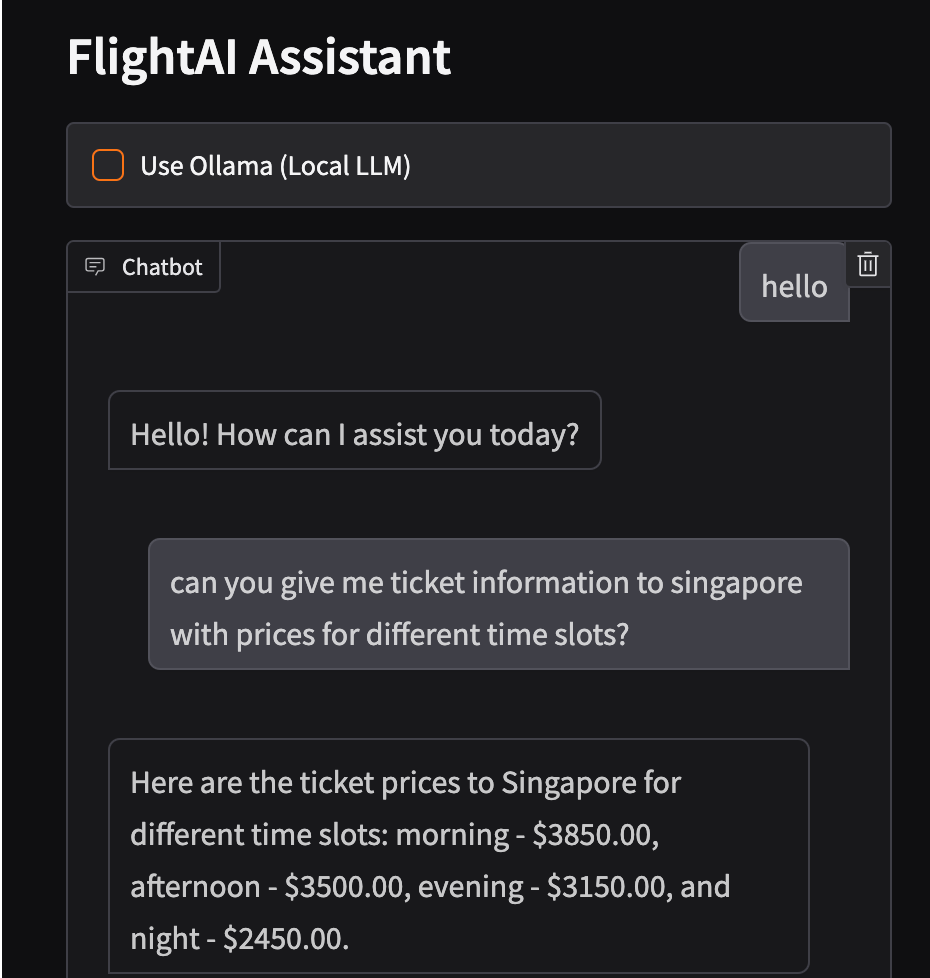

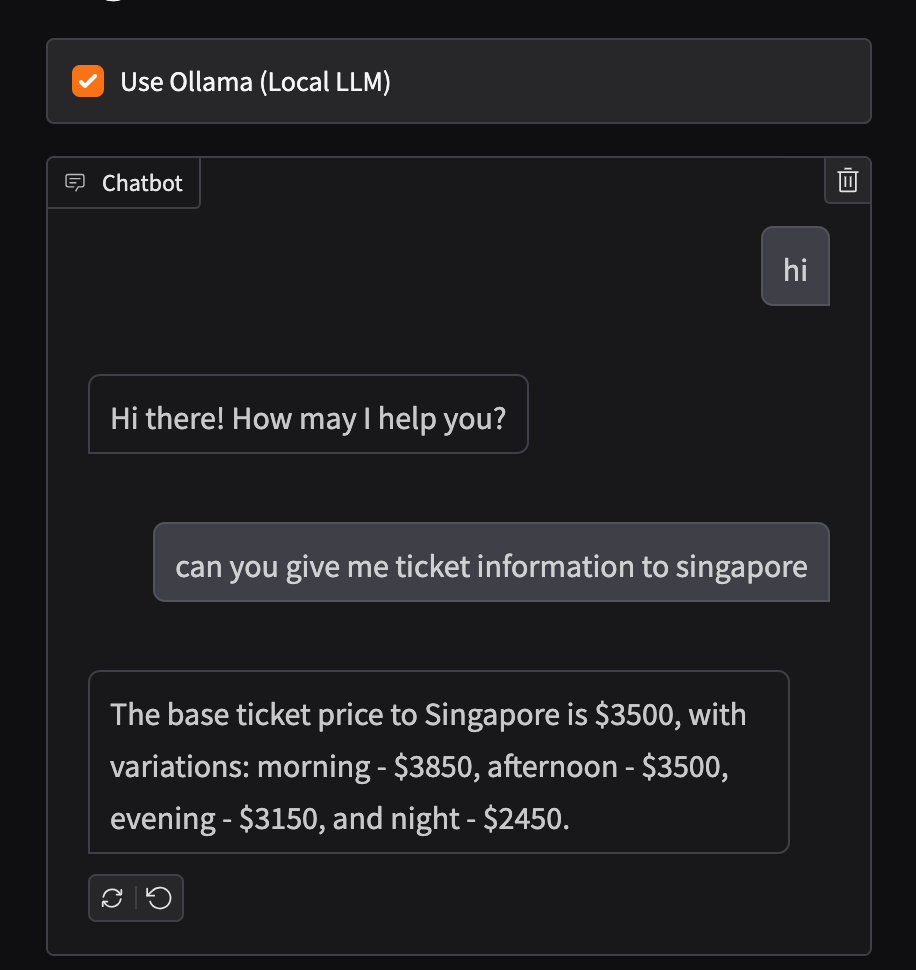

Then I fed these functions into an OpenAI model (gpt-4o-mini) and a local LLM served through Ollama (gemma3:1b).

The notebook demonstrates how the model correctly identifies when to call the function and how to parse the returned information to provide a helpful response.
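
To make the "call the function, then hand the result back" step concrete, here is a minimal sketch of a tool-call dispatcher. It is an illustration under assumptions, not the notebook's code: `TOOL_REGISTRY`, `handle_tool_call`, and the `fake_call` stand-in are hypothetical names, and the stand-in only imitates the shape of a tool call in an OpenAI chat response (an `id`, plus a `function` with a `name` and a JSON string of `arguments`), so the example runs without any API key.

```python
import json
from types import SimpleNamespace

ticket_prices = {"london": "$799", "paris": "$899"}


def get_ticket_price(destination_city):
    return ticket_prices.get(destination_city.lower(), "Unknown")


# Map tool names (as declared in the schema) to Python callables.
TOOL_REGISTRY = {"get_ticket_price": get_ticket_price}


def handle_tool_call(tool_call):
    """Run one requested tool and build the "tool" message to send back."""
    name = tool_call.function.name
    # In this sketch the arguments arrive as a JSON string.
    arguments = json.loads(tool_call.function.arguments)
    func = TOOL_REGISTRY.get(name)
    result = func(**arguments) if func else f"Unknown tool: {name}"
    return {
        "role": "tool",
        "tool_call_id": tool_call.id,
        "content": json.dumps({**arguments, "result": result}),
    }


# Stand-in for a tool call object; the id value is made up.
fake_call = SimpleNamespace(
    id="call_123",
    function=SimpleNamespace(
        name="get_ticket_price",
        arguments='{"destination_city": "London"}',
    ),
)
reply = handle_tool_call(fake_call)
print(reply)
```

In a real chat loop, the assistant message that requested the tool and this reply would both be appended to the message history before asking the model for its final answer.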

One of the most interesting aspects of this project was implementing the same functionality with local Ollama models. While the implementation differs slightly, the core concept remains the same.
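
One difference that can come up, stated here as an assumption because the notebook code is not reproduced in this post: OpenAI's chat API delivers tool arguments as a JSON string, while Ollama's native Python client hands them over as an already-parsed mapping. A small hypothetical helper, `parse_tool_arguments`, can absorb that difference so the rest of the tool-handling code stays the same for both backends.

```python
import json
from collections.abc import Mapping


def parse_tool_arguments(raw_arguments):
    """Return tool arguments as a dict, whether given as JSON text or a mapping."""
    if isinstance(raw_arguments, Mapping):
        # Already parsed: copy into a plain dict.
        return dict(raw_arguments)
    if isinstance(raw_arguments, str):
        # JSON text: treat an empty string as "no arguments".
        return json.loads(raw_arguments or "{}")
    raise TypeError(f"Unsupported arguments type: {type(raw_arguments).__name__}")


print(parse_tool_arguments('{"destination_city": "Paris"}'))
print(parse_tool_arguments({"destination_city": "Paris"}))
```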

Both models responded well, as I expected.

Results and Applications

The results were impressive with both model types. When asked questions like "What flights are available to Singapore?" or "Are there any morning flights to New York?", both the OpenAI and Ollama models correctly:

- Recognized the need to call the appropriate flight information function

- Extracted the correct parameters from the user query

- Called the function with those parameters

- Formatted the returned data into a natural, helpful response

This simple implementation demonstrates the potential for much more complex applications, such as:

- Booking systems that integrate with multiple APIs

- Customer service bots with access to real-time inventory or account information

- Educational tools that can run code examples or simulations on demand

Another advantage of adding such custom functions is that you can reduce hallucination by explicitly telling the LLM to answer only from what your functions return, and to say "I don't know" when they return nothing useful. In my example, the destinations are hard-coded, so when I ask about a destination that is not in the table, the LLM simply replies, "I have no information on that."
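
A minimal sketch of that guard, under assumptions: the price table and the first three prompt lines come from earlier in this post, while the final instruction appended to `system_message` is a hypothetical addition of the kind described above, not wording from the notebook.

```python
ticket_prices = {"london": "$799", "paris": "$899", "singapore": "$3500", "new york": "$4000"}

system_message = "You are a helpful assistant for an Airline called FlightAI. "
system_message += "Give short, courteous answers, no more than 1 sentence. "
system_message += "Always be accurate. If you don't know the answer, say so. "
# Hypothetical extra instruction: restrict price answers to tool output.
system_message += "Only quote prices returned by your tools; if a tool returns 'Unknown', say you have no information."


def get_ticket_price(destination_city):
    # Cities outside the table fall through to the "Unknown" sentinel.
    return ticket_prices.get(destination_city.lower(), "Unknown")


print(get_ticket_price("Tokyo"))  # not in the table
print(get_ticket_price("Paris"))  # in the table
```

The sentinel gives the model an explicit "no data" signal to relay, rather than leaving it to guess a price.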

Conclusion

Function-calling capabilities transform LLMs from purely conversational tools into systems that can take concrete actions and access external data sources. This simple example only scratches the surface of what's possible.

By enabling your LLM to call custom functions, you create a powerful bridge between natural language interfaces and programmatic functionality - opening up countless possibilities for practical applications in business, education, and beyond.

The full code implementation is available in my GitHub repository, where you can explore the details and adapt the approach for your own projects.