Comparing Local and OpenAI Models for Website Summarization

My Day 1 with Local and OpenAI Models for Website Summarization

I recently started Ed Donner’s LLM Engineering course. This was my very first time experimenting with language models to summarize website content, and I wanted to share what I learned from this little day-one project. I played around with both local large language models (LLMs) and OpenAI’s GPT models to see how they work, what they’re capable of, and how they could be applied in real-world business scenarios.

If you’re new to this space or just curious about how these tools can be used, this post will walk you through my discoveries and ideas for practical applications.

What I Did

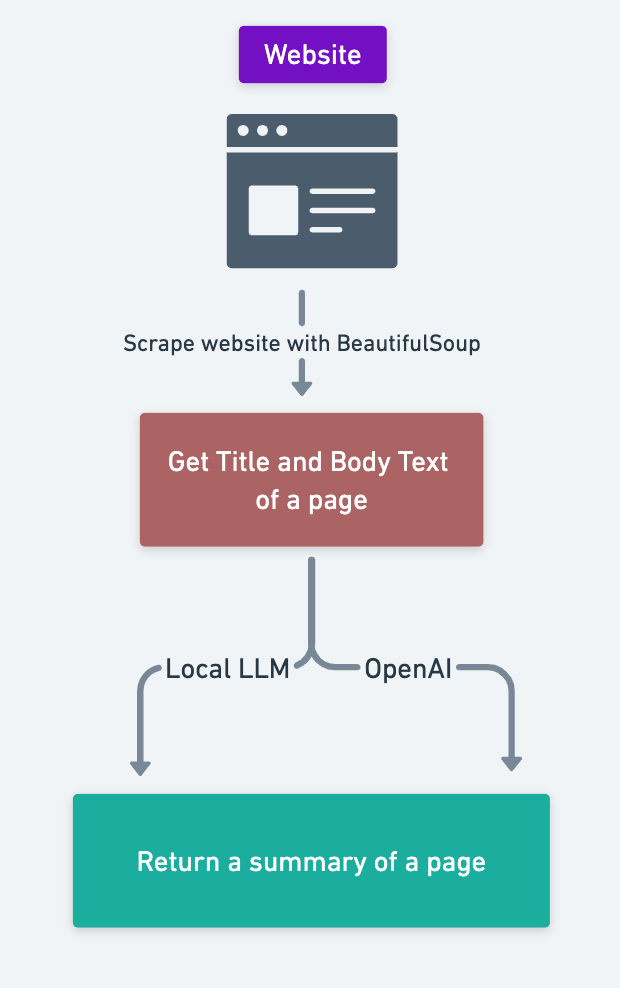

I started with a simple goal: take some website content and generate a concise summary of a page. To do this, I tried two approaches:

- Local LLMs: Models that run on your own hardware or servers. I used Ollama for this.

- OpenAI GPT Models: Cloud-based models accessed via an API.

I didn’t go too deep into fine-tuning or optimizing the models—this was more about getting a feel for what they can do right out of the box. The process involved feeding the same website content into both systems and observing the outputs.
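The first step, turning a web page into plain text that either model can read, could look roughly like the sketch below. It uses only Python’s standard library (`urllib` and `html.parser`) and is not taken from the course notebook, which may use a different scraper. The browser-like User-Agent header is an assumption, since some sites reject scripted requests without one.

```python
import urllib.request
from html.parser import HTMLParser


class PageTextExtractor(HTMLParser):
    """Collects a page's <title> and visible text, skipping non-content tags."""

    # Tags whose contents never belong in a summary
    SKIP_TAGS = {"script", "style", "noscript", "svg"}

    def __init__(self):
        super().__init__()
        self.title = ""
        self.chunks = []
        self._skip_depth = 0  # greater than 0 while inside a skipped tag
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1
        elif tag == "title":
            self._in_title = False

    def handle_data(self, data):
        text = data.strip()
        if not text or self._skip_depth:
            return
        if self._in_title:
            self.title += text
        else:
            self.chunks.append(text)


def extract_page_text(html):
    """Return (title, visible text) with one text fragment per line."""
    parser = PageTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.title, "\n".join(parser.chunks)


def fetch_html(url, timeout=10):
    """Download a page; the browser-like User-Agent is an assumed workaround."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling `title, text = extract_page_text(fetch_html(url))` gives the page title and its visible text, which is what gets sent to each model. Some sites block or paywall scripted requests, so the amount of text recovered will vary.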

Below is a simple architecture diagram of what it looks like.

What I Discovered

The entire Python notebook can be found here: https://github.com/zinkohlaing/llm_showcase/blob/main/LocalvsOpenAI_website_summary.ipynb

All credit goes to Ed Donner’s LLM Engineering course materials, which can be found here.

Here is what I observed while playing around with both OpenAI’s models and local LLMs.

Both models are surprisingly easy to use

I was amazed at how straightforward it was to get started with both approaches. OpenAI’s API is particularly beginner-friendly: you send an HTTP request with your input text, and it returns a summary in seconds. Alternatively, you can make the same calls through OpenAI’s official Python library. All you need to do is sign up and purchase some API credits. For local models, setting up the environment took a bit more effort (installing libraries, configuring hardware), but once everything was running, it felt just as intuitive. Ollama is a really handy tool to have.
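Here is a minimal sketch of that HTTP request using only Python’s standard library. It assumes OpenAI’s Chat Completions endpoint and Ollama’s OpenAI-compatible endpoint on Ollama’s default port 11434; because both accept the same request shape, one function can talk to either. The system prompt wording is a placeholder of my own, not the course’s.

```python
import json
import urllib.request

# OpenAI's Chat Completions endpoint, and Ollama's OpenAI-compatible endpoint
# on its default local port (11434)
OPENAI_URL = "https://api.openai.com/v1/chat/completions"
OLLAMA_URL = "http://localhost:11434/v1/chat/completions"

# Placeholder prompt (an assumption, not the course's exact wording)
SYSTEM_PROMPT = (
    "You are an assistant that summarizes the contents of a website. "
    "Ignore navigation text and respond with a short summary in markdown."
)


def build_summary_request(url, model, page_text, api_key="ollama"):
    """Build (but do not send) a chat-completions request asking for a summary."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Summarize this website:\n\n{page_text}"},
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Ollama ignores the key; OpenAI requires a real one
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def summarize(url, model, page_text, api_key="ollama", timeout=120):
    """Send the request and return the model's reply text."""
    request = build_summary_request(url, model, page_text, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

For example, `summarize(OPENAI_URL, "gpt-4o-mini", text, api_key=os.environ["OPENAI_API_KEY"])` targets the cloud model, and `summarize(OLLAMA_URL, "llama3.2", text)` targets a local one after `ollama pull llama3.2`. The official `openai` Python package wraps this same request, and its `base_url` parameter can point it at Ollama as well.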

OpenAI feels more polished

The summaries generated by OpenAI’s GPT models were impressively fluent and context-aware, even for complex content. It really felt like having a professional writer summarize the text for me. This level of quality is perfect for businesses that need polished outputs without much tweaking—think marketing copy, customer-facing summaries, or even executive briefs.

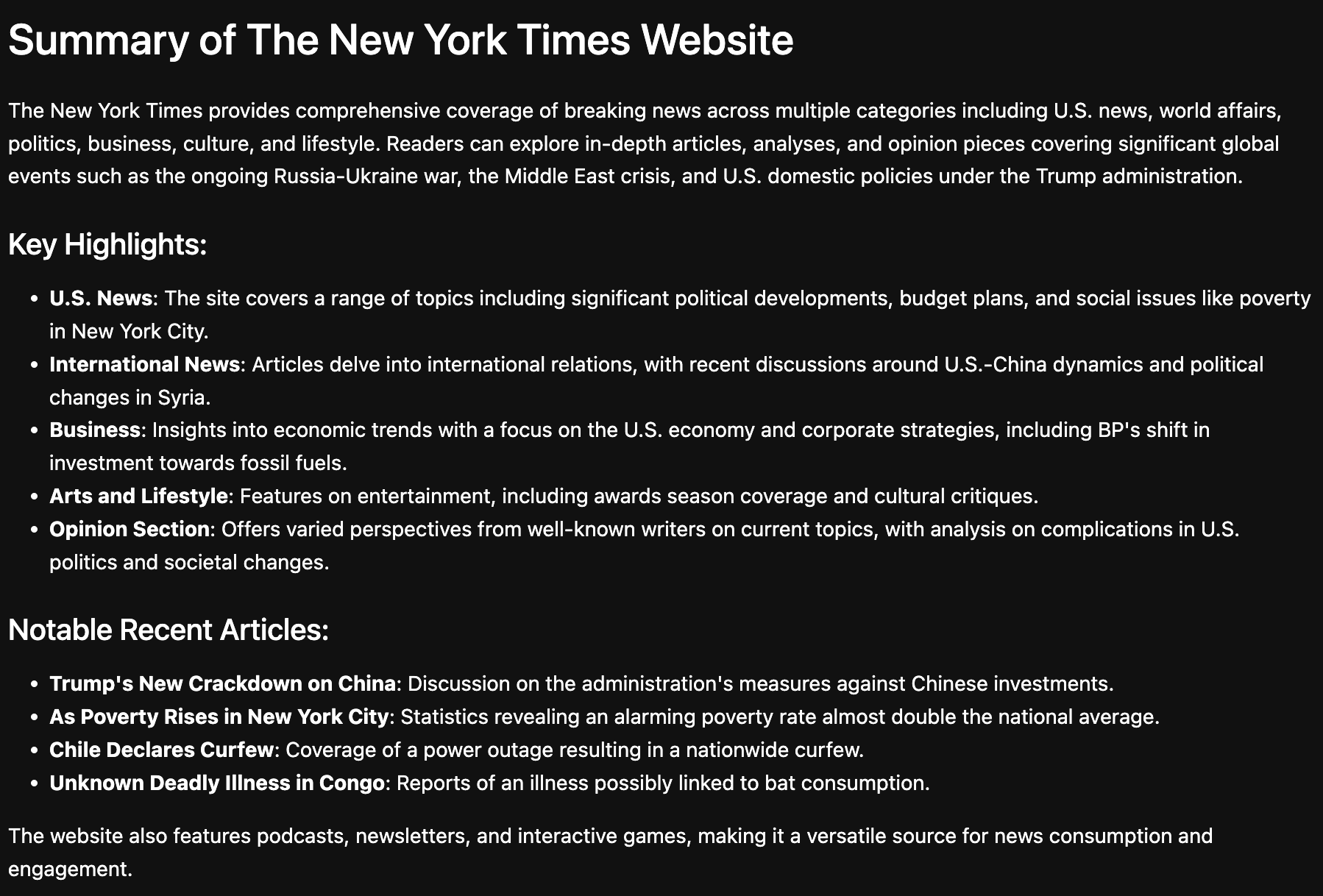

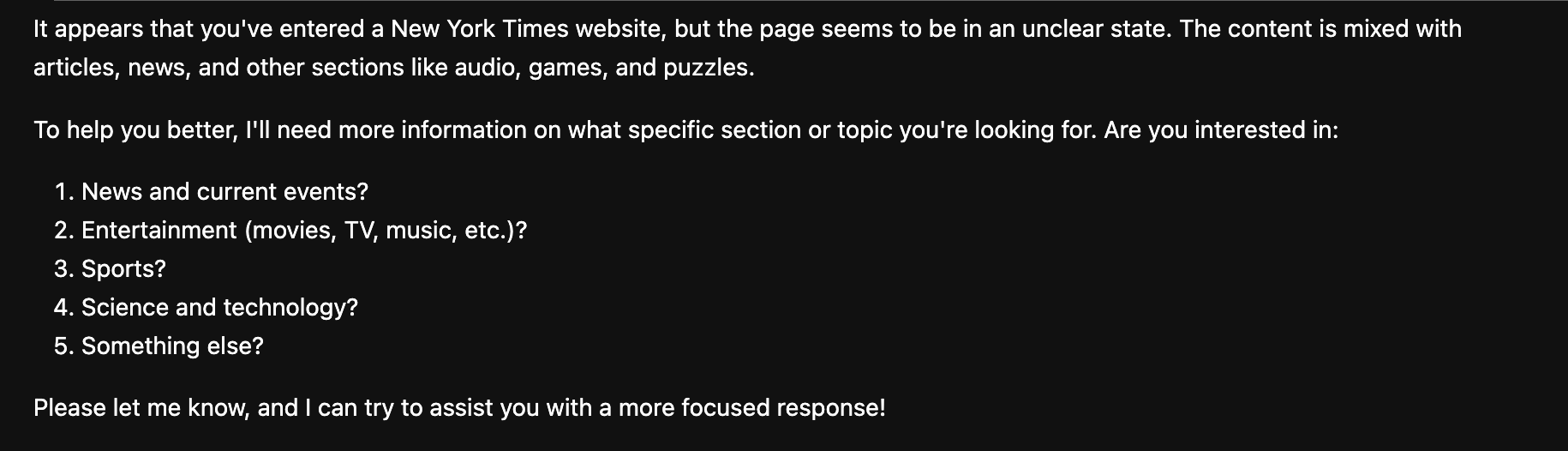

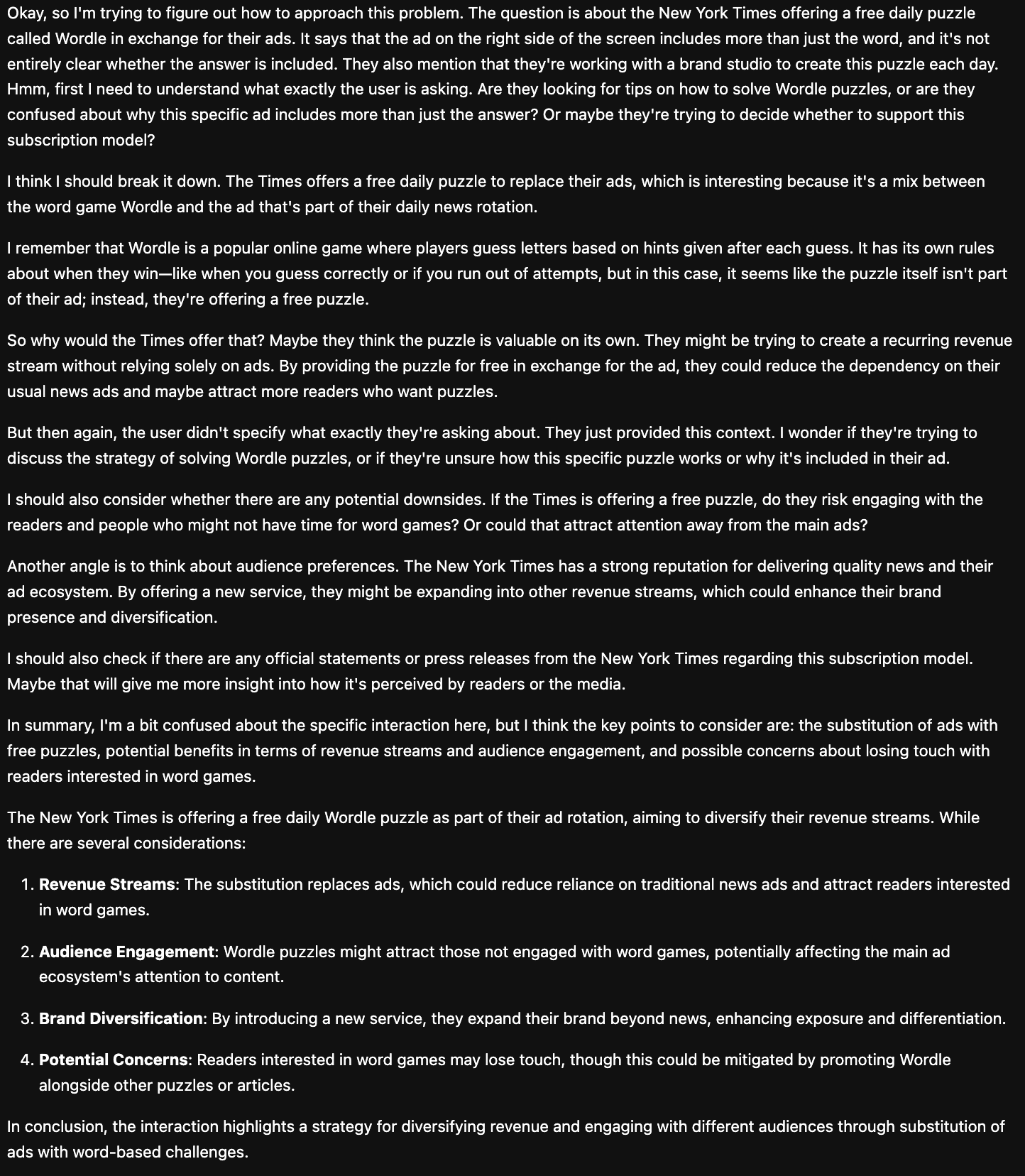

Below is an example of how differently the homepage of The New York Times can be summarized using various approaches. After scraping the homepage content, I generated summaries with local LLMs and with OpenAI’s GPT model. As the summaries show, the OpenAI output is the most polished and the easiest to understand.

OpenAI (gpt-4o-mini model)

Local LLM (llama 3.2)

Local LLM (Deepseek-r1 1.5b)
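The three-way comparison can be scripted as a loop that sends the same page text to each model. This sketch assumes the Ollama tags `llama3.2` and `deepseek-r1:1.5b` and the OpenAI-compatible endpoints; `summarize_fn` is a stand-in for any function that takes an endpoint URL, a model name, and the page text and returns the reply. DeepSeek-R1 models may prefix their answer with a `<think>...</think>` reasoning block, so the sketch strips it to keep the summaries comparable, and it records wall-clock time as a rough proxy for the local-versus-cloud speed difference.

```python
import re
import time

# Model lineup from the comparison above; the Ollama tags assume the models
# were pulled locally (e.g. `ollama pull deepseek-r1:1.5b`)
MODELS = [
    ("OpenAI (gpt-4o-mini)", "https://api.openai.com/v1/chat/completions", "gpt-4o-mini"),
    ("Local LLM (llama 3.2)", "http://localhost:11434/v1/chat/completions", "llama3.2"),
    ("Local LLM (Deepseek-r1 1.5b)", "http://localhost:11434/v1/chat/completions", "deepseek-r1:1.5b"),
]

# Matches a reasoning block, including across newlines
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_reasoning(text):
    """Drop any <think>...</think> block a reasoning model may emit."""
    return THINK_BLOCK.sub("", text).strip()


def compare_models(page_text, summarize_fn, models=MODELS):
    """Run the same text through each model; return label -> (summary, seconds)."""
    results = {}
    for label, url, model in models:
        start = time.perf_counter()
        summary = summarize_fn(url, model, page_text)
        elapsed = time.perf_counter() - start
        results[label] = (strip_reasoning(summary), elapsed)
    return results
```

Passing the call function in as a parameter keeps the loop independent of how each model is reached, so the same comparison works with raw HTTP, the `openai` package, or a fake function during testing.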

Local models offer control

While local LLMs didn’t always match OpenAI’s level of fluency, they still produced decent summaries, and they came with the added benefit of control over data privacy and costs. For businesses handling sensitive information or operating in industries with strict data regulations (like healthcare or finance), running models locally could be a game-changer. I was also amazed that I could run these models on my years-old MacBook with an Intel chip.

Cost vs Quality Trade-Offs

One thing that stood out was the trade-off between cost and quality. OpenAI’s pay-as-you-go pricing is convenient but could get expensive for high-volume tasks like summarizing hundreds of pages daily. Local models require upfront investment in hardware but could save costs in the long run if used extensively. This is something you should weigh carefully based on your needs.
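A back-of-the-envelope calculation makes this trade-off concrete. The figures are illustrative assumptions, not measurements from this experiment: the example prices are gpt-4o-mini’s launch list prices ($0.15 per million input tokens, $0.60 per million output tokens), which may have changed since, and the token estimate uses the common rule of thumb of roughly four characters per English token.

```python
def estimate_tokens(text):
    """Rough token count using the ~4 characters per English token rule of thumb."""
    return max(1, len(text) // 4)


def monthly_api_cost(pages_per_day, input_tokens_per_page, output_tokens_per_page,
                     price_in_per_million, price_out_per_million, days=30):
    """Estimated monthly API bill in dollars for a summarization workload."""
    pages = pages_per_day * days
    input_cost = pages * input_tokens_per_page * price_in_per_million / 1_000_000
    output_cost = pages * output_tokens_per_page * price_out_per_million / 1_000_000
    return input_cost + output_cost


def break_even_months(hardware_cost, monthly_api_bill, monthly_local_running_cost=0.0):
    """Months until up-front hardware pays for itself versus the API (None if never)."""
    monthly_savings = monthly_api_bill - monthly_local_running_cost
    if monthly_savings <= 0:
        return None
    return hardware_cost / monthly_savings


if __name__ == "__main__":
    # Illustrative inputs only: 500 pages/day, ~5,000 input and ~300 output tokens
    # per page, at gpt-4o-mini's launch list prices (check current pricing)
    bill = monthly_api_cost(500, 5_000, 300, 0.15, 0.60)
    print(f"Estimated API bill: ${bill:.2f}/month")
```

Plugging in your own page volume, model prices, hardware cost, and an electricity estimate for `monthly_local_running_cost` shows which side of the break-even point a given workload falls on.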

How This Can Be Applied to Businesses

As I played around with these tools, I couldn’t help but think about all the ways businesses could use them:

- Content Summarization at Scale: Imagine running a news aggregation site or managing a knowledge base. These tools can automatically summarize articles or documentation into digestible snippets for readers or employees.

- Customer Support: Summarizing long email threads or support tickets into actionable insights could save time for customer service teams.

- Internal Reporting: For companies drowning in reports and data, these models can generate concise summaries of key points, making decision-making faster.

- Marketing Automation: Use them to quickly generate summaries of blog posts, product descriptions, or campaign briefs for internal reviews or external audiences.
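Long inputs such as email threads or report bundles may not fit in a model’s context window, especially with small local models or a short configured context length. One common approach, not something tested in this post, is map-reduce summarization: split the text into chunks, summarize each one, then summarize the combined summaries. In this sketch `summarize_fn` is any function that takes text and returns a summary, and the 8,000-character chunk size is an arbitrary assumption.

```python
def split_into_chunks(text, max_chars=8_000):
    """Split text on paragraph boundaries into chunks of at most max_chars."""
    chunks, current = [], ""
    for para in text.split("\n\n"):
        # Hard-split any single paragraph that is longer than the limit
        while len(para) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(para[:max_chars])
            para = para[max_chars:]
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = para
    if current:
        chunks.append(current)
    return chunks


def summarize_long_text(text, summarize_fn, max_chars=8_000):
    """Map-reduce: summarize each chunk, then summarize the joined partial summaries."""
    chunks = split_into_chunks(text, max_chars)
    if len(chunks) == 1:
        return summarize_fn(chunks[0])
    partial_summaries = [summarize_fn(chunk) for chunk in chunks]
    return summarize_fn("\n\n".join(partial_summaries))
```

This does a single reduce pass; if the joined partial summaries are themselves too long, they would need another round of chunking.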

The possibilities are wide-ranging; it’s mostly a matter of identifying where automation can save time while maintaining quality.

Final Thoughts

This experiment was my first step into the world of language models, and it’s clear that both local LLMs and OpenAI’s GPT models have huge potential for business applications. While I didn’t dive deep into technical comparisons this time, what stood out to me is how accessible these tools are, even for someone just starting out, and how much value they can bring to real-world use cases.

If you’ve been thinking about exploring AI-powered tools for your business but weren’t sure where to start, I’d say just dive in! Whether you choose a local model or a cloud-based solution like OpenAI’s GPT models, there’s so much you can do, even on day one!

I’ll be posting more here as I continue my journey learning LLM engineering!